Secure your AI project

A Unified API

Agile and Budget-Friendly

Content filters, scoped response control, and integrated feedback loops keep answers precise.

Track performance and usage trends monthly, quarterly, and annually to maximize AI cost efficiency.

Limit tokens and requests by user or team, block queries that would overload the system, and receive early alerts to control costs and maintain system reliability.
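
As a rough illustration of per-user or per-team limits, here is a minimal fixed-window sketch in Python. The `Quota`, `Usage`, and `UsageLimiter` names, the 80% alert threshold, and the 60-second window are all assumptions made for this example, not the product's actual API or defaults.

```python
from dataclasses import dataclass


@dataclass
class Quota:
    max_requests: int          # requests allowed per window
    max_tokens: int            # tokens allowed per window
    alert_ratio: float = 0.8   # assumed: alert once 80% of either limit is used


@dataclass
class Usage:
    window_start: float = 0.0
    requests: int = 0
    tokens: int = 0


class UsageLimiter:
    """Hypothetical fixed-window limiter keyed by a user or team id."""

    def __init__(self, quota: Quota, window_seconds: float = 60.0):
        self.quota = quota
        self.window_seconds = window_seconds
        self.usage: dict[str, Usage] = {}

    def check(self, key: str, tokens: int, now: float) -> str:
        """Return 'deny', 'alert', or 'ok'; record the request unless denied."""
        u = self.usage.setdefault(key, Usage(window_start=now))
        if now - u.window_start >= self.window_seconds:
            # The previous window has elapsed, so counters start over.
            u.window_start, u.requests, u.tokens = now, 0, 0
        over_requests = u.requests + 1 > self.quota.max_requests
        over_tokens = u.tokens + tokens > self.quota.max_tokens
        if over_requests or over_tokens:
            return "deny"
        u.requests += 1
        u.tokens += tokens
        near_limit = (
            u.requests >= self.quota.alert_ratio * self.quota.max_requests
            or u.tokens >= self.quota.alert_ratio * self.quota.max_tokens
        )
        return "alert" if near_limit else "ok"
```

Passing `now` explicitly keeps the sketch easy to test; a real deployment would more likely read a clock and keep the counters in shared storage so that every gateway instance sees the same usage.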

Cache frequently requested query results in Redis or Memcached to cut inference load, reduce latency, and keep the user experience responsive.
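
The caching idea can be sketched in Python as follows. To keep the example self-contained, `TTLCache` is an in-memory stand-in for a Redis or Memcached client. It, `cache_key`, `cached_completion`, and the 300-second TTL are hypothetical names and values chosen for illustration.

```python
import hashlib
import json
import time
from typing import Callable, Optional


class TTLCache:
    """In-memory stand-in for a Redis or Memcached client (assumption)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._store: dict[str, tuple[float, str]] = {}
        self._clock = clock

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Entry has expired: drop it and report a miss.
            del self._store[key]
            return None
        return value

    def set(self, key: str, value: str, ttl_seconds: float) -> None:
        self._store[key] = (self._clock() + ttl_seconds, value)


def cache_key(model: str, prompt: str) -> str:
    """Derive a stable key from the model name and the exact prompt text."""
    payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
    return "llm:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()


def cached_completion(
    cache: TTLCache,
    model: str,
    prompt: str,
    infer: Callable[[str, str], str],
    ttl_seconds: float = 300.0,
) -> str:
    """Return a cached answer when available; otherwise run inference once."""
    key = cache_key(model, prompt)
    hit = cache.get(key)
    if hit is not None:
        return hit
    result = infer(model, prompt)
    cache.set(key, result, ttl_seconds)
    return result
```

Keying on the exact prompt means only identical queries hit the cache. Whether near-duplicate queries should share an entry is a separate design decision that this sketch does not address.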

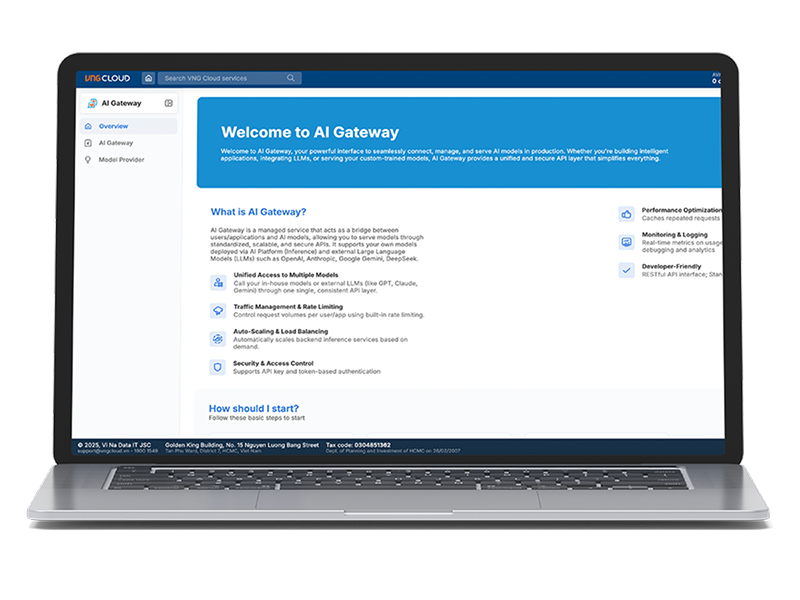

Easily connect and manage multiple LLMs from various providers via one seamless API.

Integrates easily with third-party payment systems and automates transactions based on actual usage, keeping project operations seamless and efficient.

Ensure secure data access across multiple sources with real-time AI query monitoring to detect and mitigate security threats.

Manage everything from a single, unified interface—no more manual API integrations. Automatically orchestrate requests across LLMs like GPT-4, LLaMA, and Mistral to select the best-fit model for each context.
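
One way to picture request orchestration is a small router that picks a backend per request. The Python sketch below is illustrative only: the `Request` and `ModelRouter` names, the `task` label, and the routing rules (code tasks to GPT-4, short prompts to Mistral) are assumptions, and the backends are plain callables standing in for real provider clients.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# A backend is any callable that turns a prompt into a completion.
Backend = Callable[[str], str]


@dataclass(frozen=True)
class Request:
    prompt: str
    task: str = "chat"  # hypothetical label, e.g. "chat" or "code"


class ModelRouter:
    """Hypothetical router that selects one registered backend per request."""

    def __init__(self, backends: Dict[str, Backend], default: str):
        if default not in backends:
            raise ValueError(f"unknown default backend: {default!r}")
        self.backends = backends
        self.default = default

    def choose(self, request: Request) -> str:
        # Illustrative rules only; real selection criteria are not specified.
        if request.task == "code" and "gpt-4" in self.backends:
            return "gpt-4"
        if len(request.prompt) < 200 and "mistral" in self.backends:
            return "mistral"
        return self.default

    def complete(self, request: Request) -> Tuple[str, str]:
        """Return the chosen backend name together with its response."""
        name = self.choose(request)
        return name, self.backends[name](request.prompt)
```

Callers only ever talk to `complete`, so adding or swapping a provider means registering another callable rather than changing application code.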

Lower system load, boost response speed, and cut AI costs—while auto-scaling ensures consistent performance during traffic spikes.